Your keynote speaker talked about digital transformation. So did the breakout session on operations. And the customer panel. And the innovation workshop.

But no one realized this was the dominant theme until three weeks after the event—too late to address it, amplify it, or build next year’s programming around it.

This is the problem with session-by-session event analysis. You see trees but miss the forest.

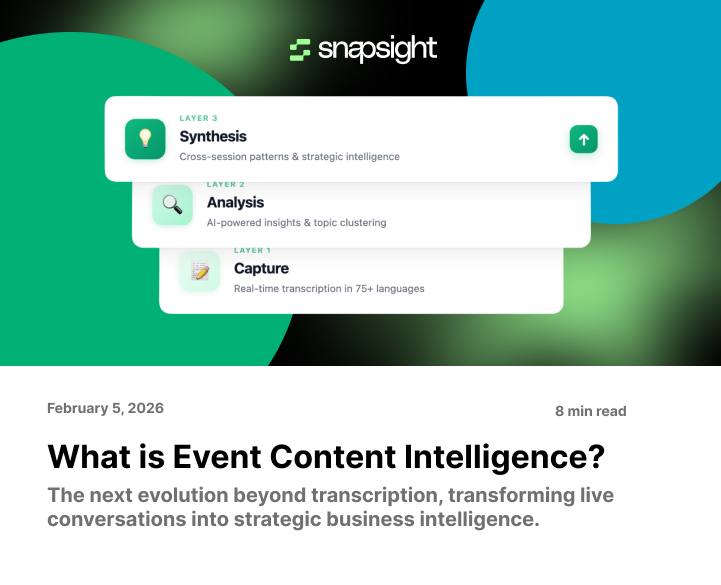

Cross-session event analysis changes this. By analyzing content across all sessions simultaneously, you discover patterns, contradictions, and insights that no single session reveals.

What is Cross-Session Event Analysis?

Cross-session event analysis examines content across multiple sessions to identify patterns, themes, contradictions, and trends that emerge when you view the event holistically rather than session-by-session.

Traditional approach:

- Session 1 analysis: Key takeaways from the keynote

- Session 2 analysis: Key takeaways from the panel

- Session 3 analysis: Key takeaways from the workshop

- Result: 30 separate summaries with no synthesis

Cross-session approach:

- All 30 sessions are analyzed together

- AI identifies “digital transformation” in 18 sessions

- Detects 3 contradicting viewpoints on implementation strategy

- Reveals regional differences (EMEA vs. APAC perspectives)

- Result: Strategic intelligence about what mattered most across the entire event

Why Cross-Session Analysis Matters

The Multi-Track Conference Challenge

Modern conferences run parallel sessions. ICCA Congress 2024: 125 parallel sessions. Tech Week Singapore: 500+ concurrent sessions. No human can attend everything.

Session-by-session analysis means:

- Attendees only learn from sessions they attended (10-15% of total content)

- Organizers review individual session feedback but miss event-wide patterns

- Strategic themes go unnoticed because they’re distributed across sessions

- Contradictions between speakers remain unresolved

Cross-session analysis solves this by processing all content simultaneously, revealing the full picture.

Real-World Example: ICCA Congress 2024

- Event: 1,000+ attendees, 125 parallel sessions, 12 languages

A session-by-session approach would provide:

- 125 individual session summaries

- Manual review required to find connections

- 40+ hours of staff time to analyze

- Insights delivered 2-3 weeks post-event

Cross-session analysis delivered:

- “Sustainability in convention centres” appeared in 18 sessions across different focus areas

- Three distinct approaches to hybrid event ROI measurement emerged

- Regional perspective differences: APAC prioritized technology, EMEA prioritized sustainability, Americas prioritized accessibility

- Executive briefing generated automatically the next day

- Result: Association leadership identified member priorities they would have missed with a session-by-session review and adjusted the strategic plan within days of the event.

5 Patterns You Can Detect

1. Recurring Themes (What Matters Most)

What it reveals: Topics appearing across multiple sessions—even when speakers use different terminology—indicate what your audience cares about most.

Example: Healthcare conference processed by Snapsight’s Analyst Agent:

- “Patient outcomes” appeared in 23 sessions

- “Value-based care” in 19 sessions

- “AI diagnostic tools” in 15 sessions

- “Staffing shortages” in 12 sessions

Actionable insight: Next year’s program should heavily feature patient outcomes (appeared most) and consider adding an AI diagnostics track (emerging interest).
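As a rough illustration of how recurring themes can be counted, here is a minimal Python sketch. It assumes you already have one transcript per session and a hand-built alias table that maps different phrasings to one canonical theme. The alias table, the function name `sessions_per_theme`, and the sample transcripts are all hypothetical and invented for this example; they are not Snapsight's implementation, which would more plausibly rely on semantic matching than on literal phrases.

```python
from collections import defaultdict

# Hypothetical alias table: maps the phrasings speakers use to one canonical theme.
ALIASES = {
    "patient outcomes": "patient outcomes",
    "outcomes for patients": "patient outcomes",
    "value-based care": "value-based care",
    "ai diagnostic tools": "ai diagnostic tools",
    "ai diagnostics": "ai diagnostic tools",
}


def sessions_per_theme(transcripts):
    """Return {theme: number of sessions that mention it at least once}."""
    sessions = defaultdict(set)
    for session_id, text in transcripts.items():
        lowered = text.lower()
        for phrase, theme in ALIASES.items():
            if phrase in lowered:
                # A set ensures each session counts once per theme.
                sessions[theme].add(session_id)
    return {theme: len(ids) for theme, ids in sessions.items()}


# Made-up transcripts, for illustration only.
transcripts = {
    "keynote": "Patient outcomes improve when AI diagnostics are deployed carefully.",
    "panel-a": "Value-based care depends on better outcomes for patients.",
    "workshop-1": "We piloted AI diagnostic tools in two clinics.",
}

counts = sessions_per_theme(transcripts)
ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
print(ranked)
```

Counting sessions rather than raw mentions keeps one enthusiastic speaker from dominating the ranking, which matches the "appeared in N sessions" framing used above.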

2. Contradictions (Where Experts Disagree)

What it reveals: When speakers present contradicting information, attendees get confused. Cross-session analysis identifies these contradictions so you can address them.

Example: Corporate Sales Kickoff:

- Morning session: VP Sales said, “Prioritize enterprise accounts.”

- Afternoon breakout: Regional managers said, “SMB showing highest growth.”

- Executive panel: CEO emphasized “mid-market is our sweet spot.”

Actionable insight: The leadership team realized mixed messaging was confusing sales teams. They held a clarification session before the event ended: “Enterprise for revenue, SMB for growth, mid-market for both. Here’s how to prioritize.”
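One simple way to surface this kind of conflict is to group speaker claims by topic and flag any topic where more than one position appears. The sketch below assumes an upstream step has already labelled each claim with a topic and a position; that labelling is the hard part and is not shown. The `Claim` type, its field names, and the "segment priority" label are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    session: str
    speaker: str
    topic: str     # what the claim is about (assumed to be labelled upstream)
    position: str  # the stance taken (assumed to be labelled upstream)


def find_contradictions(claims):
    """Return {topic: {position: [speakers]}} for topics with more than one position."""
    by_topic = defaultdict(lambda: defaultdict(list))
    for claim in claims:
        by_topic[claim.topic][claim.position].append(claim.speaker)
    return {
        topic: dict(positions)
        for topic, positions in by_topic.items()
        if len(positions) > 1
    }


# The three statements from the sales kickoff example, labelled by hand.
claims = [
    Claim("Morning session", "VP Sales", "segment priority", "enterprise"),
    Claim("Afternoon breakout", "Regional managers", "segment priority", "SMB"),
    Claim("Executive panel", "CEO", "segment priority", "mid-market"),
]

conflicts = find_contradictions(claims)
print(conflicts)
```

Differing positions are only candidate contradictions. A person still has to decide whether the speakers genuinely disagree or are describing different goals.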

3. Regional Differences (How Perspectives Vary)

What it reveals: Global organizations discover how regional teams view the same challenge differently.

Example: Reuters global conference analysis:

- EMEA sessions: Privacy regulations dominated discussions (mentioned 34 times)

- APAC sessions: Mobile-first approach mentioned 28 times

- Americas sessions: Integration with legacy systems mentioned 31 times

Actionable insight: The product roadmap was adjusted to address regional priorities. EMEA got enhanced privacy features, APAC got mobile optimization, and the Americas got an integration toolkit.
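A regional breakdown like this can be sketched as a per-region tally of topic mentions. In the example below, the three leading counts (34, 28, 31) come from the example above; the smaller counts are invented filler so that each region has more than one topic to compare. The function name and data layout are assumptions.

```python
from collections import Counter, defaultdict

# One (region, topic) pair per mention. Leading counts echo the example above;
# the smaller counts (9, 11, 7) are invented for illustration.
mentions = (
    [("EMEA", "privacy regulations")] * 34
    + [("EMEA", "mobile-first")] * 9
    + [("APAC", "mobile-first")] * 28
    + [("APAC", "privacy regulations")] * 11
    + [("Americas", "legacy integration")] * 31
    + [("Americas", "mobile-first")] * 7
)


def top_topic_by_region(mentions):
    """Return {region: (most-mentioned topic, mention count)}."""
    per_region = defaultdict(Counter)
    for region, topic in mentions:
        per_region[region][topic] += 1
    return {
        region: counter.most_common(1)[0]
        for region, counter in per_region.items()
    }


leaders = top_topic_by_region(mentions)
print(leaders)
```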

4. Emerging Trends (What’s Gaining Momentum)

What it reveals: Topics mentioned infrequently in early sessions but increasingly in later sessions indicate emerging trends.

Example: Tech conference Day 1-3 analysis:

- Day 1: “Agentic AI” mentioned 3 times

- Day 2: Mentioned 12 times

- Day 3: Mentioned 27 times, including the keynote and 4 panels

Actionable insight: The trend was spotted in real time. Conference organizers added a Day 3 bonus session on “Implementing Agentic AI” in response to demand, and it became the most-attended session.
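The day-over-day pattern above can be checked with a few lines of Python. This sketch treats a topic as "emerging" when its mention count rises every day and the final day is at least some multiple of the first. The `min_growth` threshold of 2.0 is an arbitrary assumption, not a recommended value.

```python
def is_emerging(daily_counts, min_growth=2.0):
    """True if mentions rise every day and the last day is at least
    min_growth times the first day."""
    if len(daily_counts) < 2 or daily_counts[0] <= 0:
        return False
    rising = all(later > earlier
                 for earlier, later in zip(daily_counts, daily_counts[1:]))
    return rising and daily_counts[-1] / daily_counts[0] >= min_growth


# "Agentic AI" mentions on Day 1, Day 2, Day 3 from the example above.
agentic_ai = [3, 12, 27]

# Growth factor from each day to the next.
day_over_day = [later / earlier
                for earlier, later in zip(agentic_ai, agentic_ai[1:])]

print(day_over_day, is_emerging(agentic_ai))
```

With the example counts, mentions quadruple from Day 1 to Day 2 and more than double again on Day 3, so the topic is flagged.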

5. Content Gaps (What Attendees Wanted But Didn’t Get)

What it reveals: Questions asked across multiple Q&A sessions but never fully addressed indicate content gaps.

Example: Association conference Q&A analysis:

- “How do we measure ROI?” was asked in 15 sessions

- Only 3 sessions directly addressed ROI measurement

- Gap identified: High demand, insufficient supply

Actionable insight: Next year’s program added a dedicated ROI measurement track. Post-event survey showed 92% satisfaction with this addition.
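The gap in this example is a ratio of demand to supply: sessions where the question was asked, divided by sessions that addressed it. A minimal sketch follows, assuming both counts are already available per topic. The function name and the `min_ratio` cutoff of 2.0 are hypothetical.

```python
def content_gaps(asked_in, addressed_in, min_ratio=2.0):
    """Return {topic: demand/supply ratio} for topics asked about in far more
    sessions than addressed them. Topics never addressed get an infinite ratio."""
    gaps = {}
    for topic, demand in asked_in.items():
        supply = addressed_in.get(topic, 0)
        ratio = demand / supply if supply else float("inf")
        if ratio >= min_ratio:
            gaps[topic] = ratio
    return gaps


# Counts from the association conference example above.
asked_in = {"ROI measurement": 15}      # sessions where the question came up
addressed_in = {"ROI measurement": 3}   # sessions that directly covered it

gaps = content_gaps(asked_in, addressed_in)
print(gaps)
```

Here the question came up in five times as many sessions as addressed it, which is the "high demand, insufficient supply" signal described above.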

Technology Requirements

Not all event platforms can do cross-session analysis. Here’s what’s required:

1. Simultaneous Multi-Session Processing

The platform must process multiple sessions concurrently, not sequentially. Sequential processing can’t identify real-time trends.

Snapsight capability: Processes 50+ sessions concurrently

2. Semantic Understanding (Not Just Keywords)

The platform must understand concepts, not just match keywords. “Digital transformation” and “tech modernization” are the same theme—AI must recognize this.

How it works: Natural language processing identifies semantic relationships between different terms and phrases.
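A common way to compare phrases by meaning is to represent each one as a vector (an embedding) and measure cosine similarity between vectors. The sketch below shows only the comparison step. The three-dimensional vectors are invented stand-ins for what a real embedding model would produce, and the 0.9 threshold is an arbitrary assumption. Nothing here describes how any particular platform does it.

```python
import math

# Toy vectors standing in for real phrase embeddings (values are invented).
EMBEDDINGS = {
    "digital transformation": (0.9, 0.1, 0.2),
    "tech modernization": (0.8, 0.2, 0.3),
    "staffing shortages": (0.1, 0.9, 0.1),
}


def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def same_theme(phrase_a, phrase_b, threshold=0.9):
    """Treat two phrases as one theme when their vectors are close enough."""
    return cosine(EMBEDDINGS[phrase_a], EMBEDDINGS[phrase_b]) >= threshold


print(same_theme("digital transformation", "tech modernization"))
print(same_theme("digital transformation", "staffing shortages"))
```

With these toy vectors the first pair is grouped into one theme and the second is not, even though no keyword is shared in either pair.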

3. Speaker Attribution

Cross-session analysis needs to know who said what. “3 speakers mentioned AI” is less useful than “CTO, VP Innovation, and Customer Success all mentioned AI—leadership alignment.”

What to look for: Speaker identification with role/title attribution
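To make the difference concrete, the sketch below groups topic mentions by the speaker's role instead of just counting them. It assumes each mention already carries a role label; the data layout and function name are hypothetical.

```python
from collections import defaultdict


def roles_by_topic(mentions):
    """Return {topic: sorted list of distinct speaker roles that raised it}."""
    roles = defaultdict(set)
    for speaker_role, topic in mentions:
        roles[topic].add(speaker_role)
    return {topic: sorted(found) for topic, found in roles.items()}


# The three AI mentions from the example above, with roles attached.
mentions = [
    ("CTO", "AI"),
    ("VP Innovation", "AI"),
    ("Customer Success", "AI"),
]

attributed = roles_by_topic(mentions)
print(attributed)
```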

4. Temporal Analysis

The platform must track when themes appear. Did a topic emerge gradually or suddenly? Was it consistent or declining?

What to look for: Timeline visualization showing topic frequency over time

5. Cross-Lingual Analysis

Global events use multiple languages. The platform must analyze themes across language barriers.

Snapsight capability: 75+ languages analyzed together. When Spanish speakers and Japanese speakers both discuss “sustainability,” the system connects them.

How to Implement for Your Next Event

Pre-Event: Set Up Intelligence Parameters

Define what to track:

- Key themes to monitor (from previous events)

- Competitor mentions to flag

- Product features to track

- Sentiment targets to measure

Configure platform:

- Train custom vocabulary (product names, industry terms)

- Set up speaker attribution rules

- Define cross-session analysis parameters

- Time required: 2-3 hours pre-event setup

During Event: Monitor Real-Time Intelligence

Live dashboard shows:

- Trending topics across all sessions

- Recurring themes appearing

- Sentiment tracking (positive/negative/neutral)

- Emerging contradictions flagged

Actionable responses:

- Spot trending topic → Add an impromptu session or panel

- Detect contradiction → Clarify in closing keynote

- Identify gap → Address in Q&A or follow-up content

Staff requirement: 1 person monitoring dashboard (10-15 min/hour)

Post-Event: Extract Strategic Intelligence

Automated outputs:

- Executive briefing with top patterns

- Cross-session trend report

- Competitive intelligence summary

- Content gap analysis for the next event

Manual review:

- Validate AI findings (30-60 minutes)

- Add strategic recommendations

- Share with leadership and planning teams

- Total time to insight: Next-day delivery vs. 2-3 weeks with manual review

ROI: What Cross-Session Analysis Delivers

Improved Event Programming:

- Data-driven topic selection (not guesswork)

- Better speaker alignment (address contradictions)

- Regional customization (acknowledge perspective differences)

- Result: Higher attendee satisfaction, better NPS

Faster Strategic Insights:

- Executive briefing next-day (not 3 weeks later)

- Real-time course correction during the event

- Immediate competitive intelligence

- Result: Timely decisions while momentum exists

Content Marketing Multiplication:

- Identify best content for repurposing (most-discussed topics)

- Create theme-based content series (recurring patterns)

- Regional content customization (perspective differences)

- Result: 3-5x more leads from repurposed content

Institutional Knowledge Capture:

- Year-over-year trend analysis (themes evolving)

- Best practices database (solutions that worked)

- Expertise mapping (who knows what)

- Result: Permanent, searchable organizational memory

The Strategic Advantage

Organizations still doing session-by-session analysis are flying blind. They see individual pieces but miss the strategic picture.

Cross-session analysis reveals:

- What your audience truly cares about (not what you think they care about)

- Where your messaging is inconsistent (and confusing customers)

- How perspectives differ across regions (and how to address each)

- What trends are emerging (before competitors notice)

- What content gaps exist (opportunities for next time)

This intelligence drives better event programming, faster strategic decisions, and higher event ROI.

The technology exists. The ROI is proven. The competitive advantage goes to organizations that adopt first.

Your next conference will generate dozens or hundreds of sessions. Will you analyze them one-by-one, or discover the patterns that only cross-session analysis reveals?